Key Takeaways

- Ahrefsbot is an SEO crawler that gathers the data behind Ahrefs’ SEO tools, helping you evaluate websites and their online footprint.
- Giving Ahrefsbot access to your site improves your site’s visibility in Ahrefs’ data and gives you useful competitive and content insights.
- Tracking Ahrefsbot’s activity in your server logs and analytics tools shows you its impact on your website’s performance.
- Controlling Ahrefsbot through robots.txt rules or the Crawl-delay directive helps you manage its access and minimize unnecessary server strain.
- Consider the potential downsides, such as added server load, skewed data, or security exposure from intensive crawling.
- Transparent web crawling respects privacy and site policies, so it is important to balance discoverability against responsible data gathering.

AhrefsBot is a crawler from Ahrefs, an established SEO toolkit trusted by SEOs and agencies globally. Crawling billions of pages daily, AhrefsBot gathers information about websites, backlinks and keywords, enabling users to study search rankings and competitor tactics.

The bot’s activity is frequently seen in server logs, which makes it familiar to webmasters and SEO professionals everywhere. Understanding it is crucial for anyone handling site presence or SEO analytics.

What is Ahrefsbot?

Ahrefsbot is a focused crawler, programmed to crawl the web and power Ahrefs’ link index. It’s similar to the famous Googlebot, except it collects SEO data. Its crawling is at the core of the tools offered by the Ahrefs platform, allowing professionals to audit backlinks, keyword targets, and content strategies worldwide.

1. Its Purpose

At its heart, Ahrefsbot is an active web crawler. It crawls and catalogs information about web pages with a special focus on backlinks, which are vital for effective SEO work. This data feeds straight into Ahrefs’ analytics tools, allowing agencies, consultants, and site owners to analyze competitor link profiles or plan their own SEO campaigns.

The information collected by Ahrefsbot guides link-building, keyword, and content optimization decisions, bringing actionable insights right to the doorsteps of digital marketers. For anyone managing a website or digital marketing campaign, the purpose is straightforward: Ahrefsbot gathers the raw materials that drive competitive analysis and SEO strategy.

By charting how sites link to one another across the web, it helps users identify opportunities, threats, and trends that would otherwise be invisible, which in turn informs decisions that improve a site’s search performance.

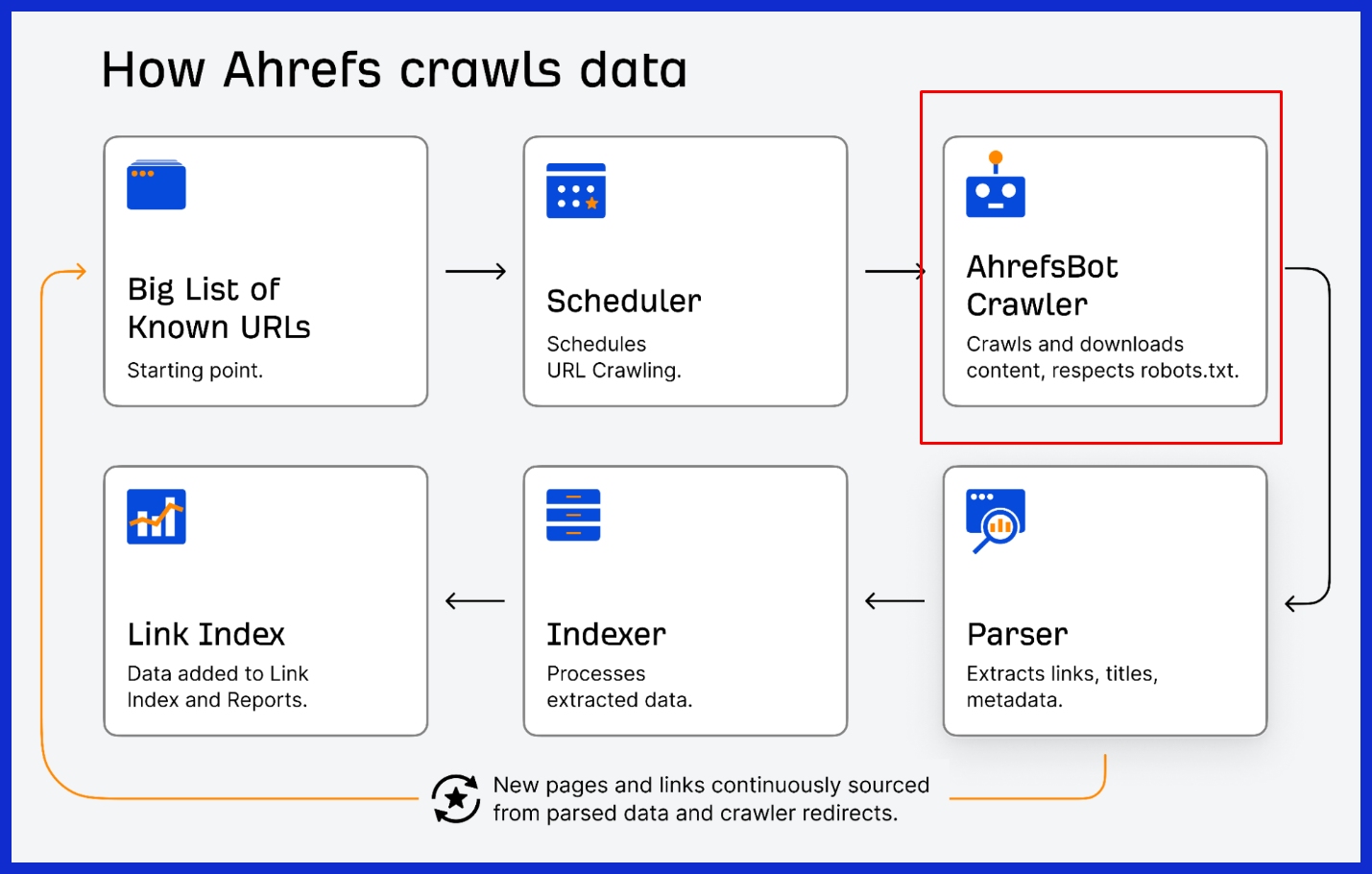

2. Its Process

Ahrefsbot crawls websites through HTTP requests to web servers. Like a browser, it downloads pages and extracts information. It honors robots.txt directives, allowing site owners to dictate what should be crawled.

Like Googlebot, it discovers pages by following links between them, but its crawl rate and depth are tuned for collecting SEO data rather than purely for search engine indexing. The bot processes vast amounts of data, parsing everything from anchor text to HTTP headers.
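To make the robots.txt and link-following behavior concrete, here is a minimal Python sketch of how a polite crawler works in general. It is not Ahrefs’ code: the site is a hypothetical in-memory map of pages to links, and "fetching" a page just means recording it. It uses Python’s standard urllib.robotparser to skip any URL that robots.txt disallows for the given user-agent.

```python
from collections import deque
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for the simulated site.
ROBOTS_TXT = """\
User-agent: AhrefsBot
Disallow: /private/
"""

# Hypothetical site: each path maps to the paths it links to.
SITE = {
    "/": ["/blog/", "/private/report"],
    "/blog/": ["/blog/post-1", "/"],
    "/blog/post-1": ["/blog/"],
    "/private/report": ["/private/data"],
    "/private/data": [],
}


def polite_crawl(site, robots_txt, user_agent, start="/"):
    """Breadth-first crawl that follows links but skips URLs robots.txt disallows."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    seen, fetched = {start}, []
    queue = deque([start])
    while queue:
        path = queue.popleft()
        if not rules.can_fetch(user_agent, path):
            continue  # disallowed: don't fetch it and don't follow its links
        fetched.append(path)  # stands in for downloading the page
        for link in site.get(path, []):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return fetched


print(polite_crawl(SITE, ROBOTS_TXT, "AhrefsBot"))
```

Passing "Googlebot" instead also reaches /private/report and /private/data, because the hypothetical rule only targets AhrefsBot. Note that urllib.robotparser matches on the product token, so pass "AhrefsBot" rather than the full user-agent string.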

Its crawl can consume server resources and occasionally affect site performance if left unchecked. That’s why webmasters are empowered to set crawl delays or block Ahrefsbot outright if necessary.

3. Its Data

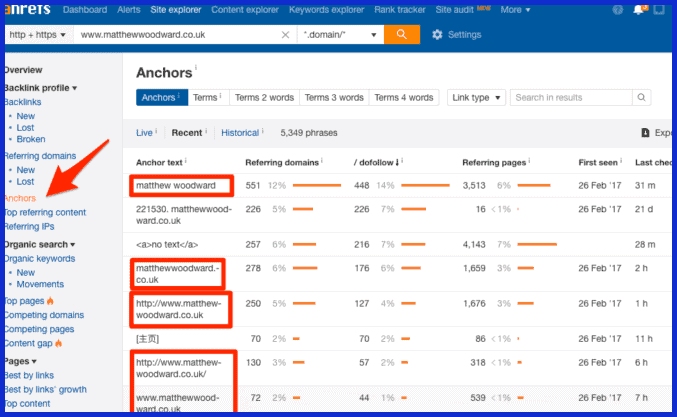

The main information Ahrefsbot collects is links (internal, external, dofollow, and nofollow) and their context. It also collects data about keywords, page content, and site structure. This data powers Ahrefs’ backlink profile tracking, keyword ranking analysis, and content gap suggestions.
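The internal/external and dofollow/nofollow distinctions above can be illustrated with a short Python sketch built on the standard library’s html.parser. It is a simplification, not how Ahrefs parses pages: it treats a link as nofollow when its rel attribute contains the nofollow token (ignoring related values such as ugc and sponsored), and calls a link internal when its host matches the page’s host. The class name LinkCollector and the example URLs are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect <a href> links with anchor text, a nofollow flag, and an internal flag."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.page_host = urlparse(page_url).netloc
        self.links = []
        self._current = None  # the <a> element currently being read, if any

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        href = attrs.get("href")
        if not href:
            return
        rel_tokens = (attrs.get("rel") or "").lower().split()
        url = urljoin(self.page_url, href)  # resolve relative links
        self._current = {
            "url": url,
            "text": "",
            "nofollow": "nofollow" in rel_tokens,
            "internal": urlparse(url).netloc == self.page_host,
        }

    def handle_data(self, data):
        if self._current is not None:
            self._current["text"] += data  # accumulate anchor text

    def handle_endtag(self, tag):
        if tag == "a" and self._current is not None:
            self._current["text"] = self._current["text"].strip()
            self.links.append(self._current)
            self._current = None


html = (
    '<p>See <a href="/pricing">our pricing</a> or '
    '<a href="https://example.org/guide" rel="nofollow ugc">this guide</a>.</p>'
)
collector = LinkCollector("https://example.com/blog/post")
collector.feed(html)
for link in collector.links:
    print(link)
```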

SEO pros take that data to benchmark against rivals, optimize their own link-building efforts, and polish on-page content. The depth and currency of Ahrefs’ index are directly related to the scale and effectiveness of Ahrefsbot’s crawling.

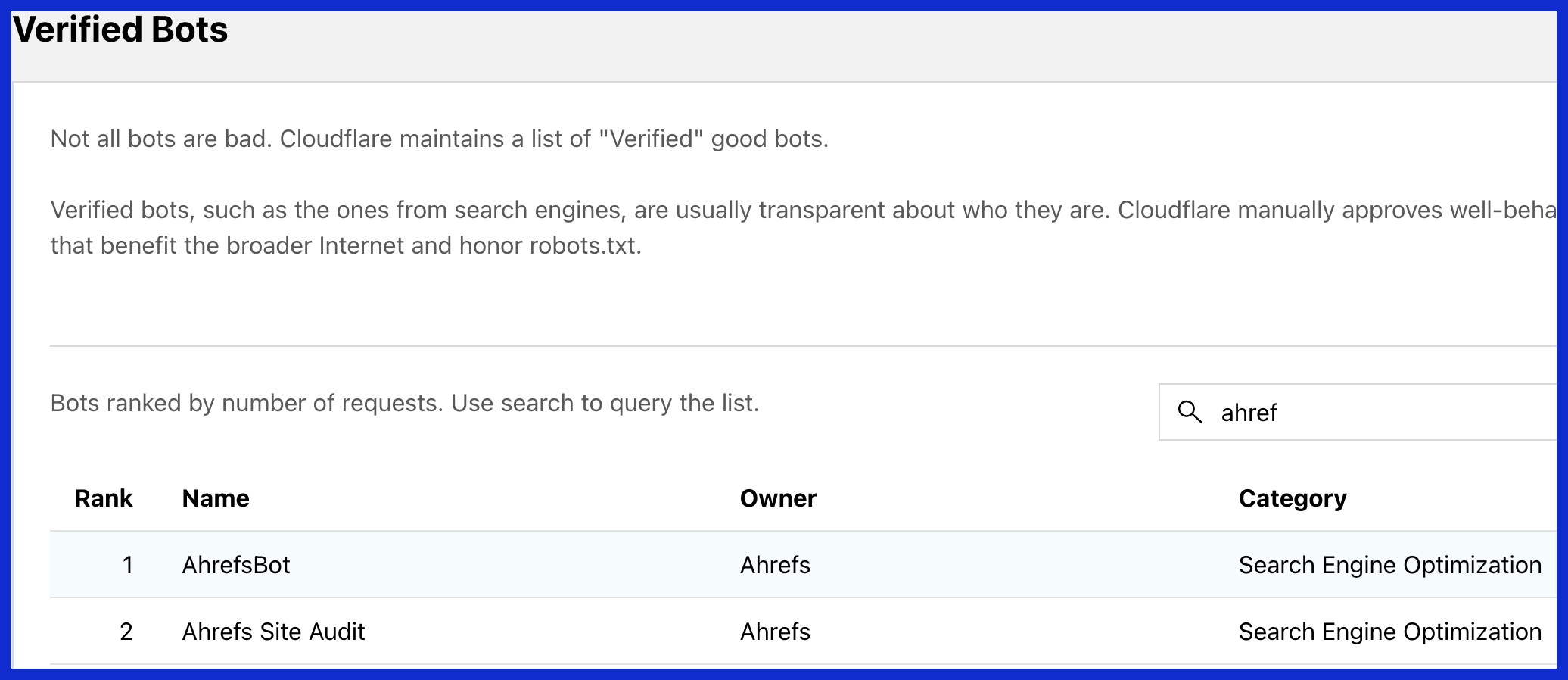

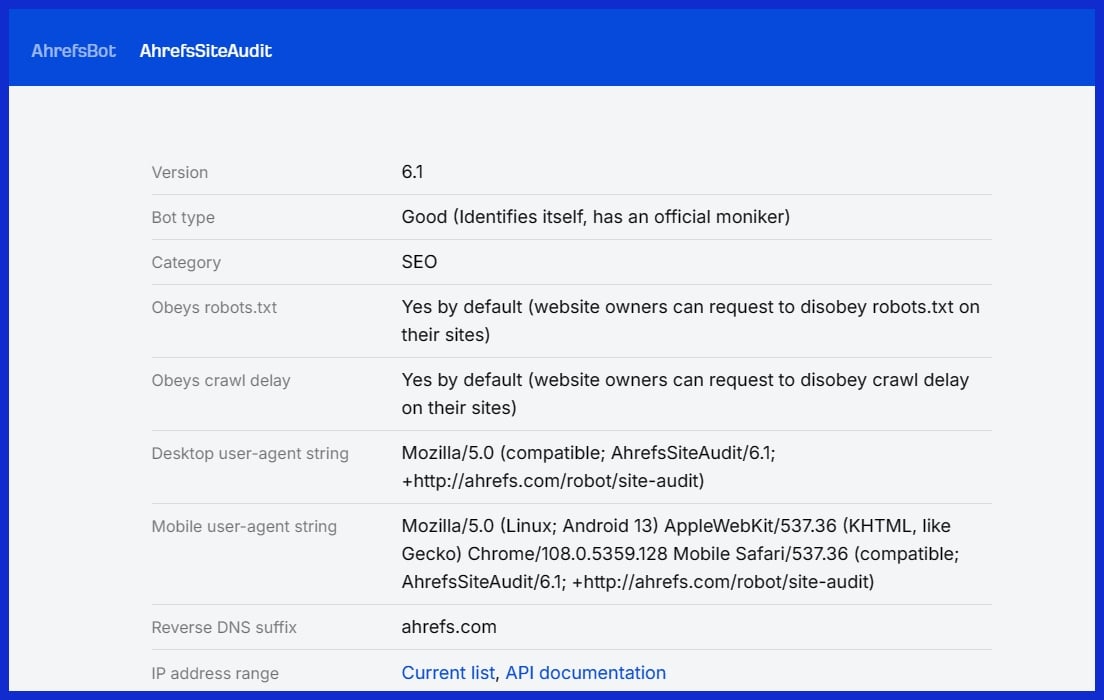

4. Its Identity

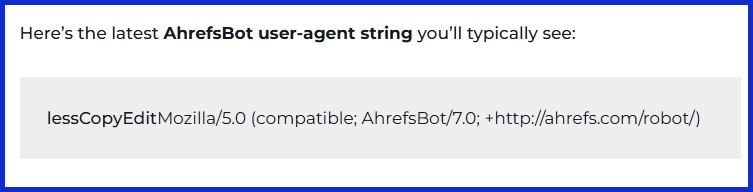

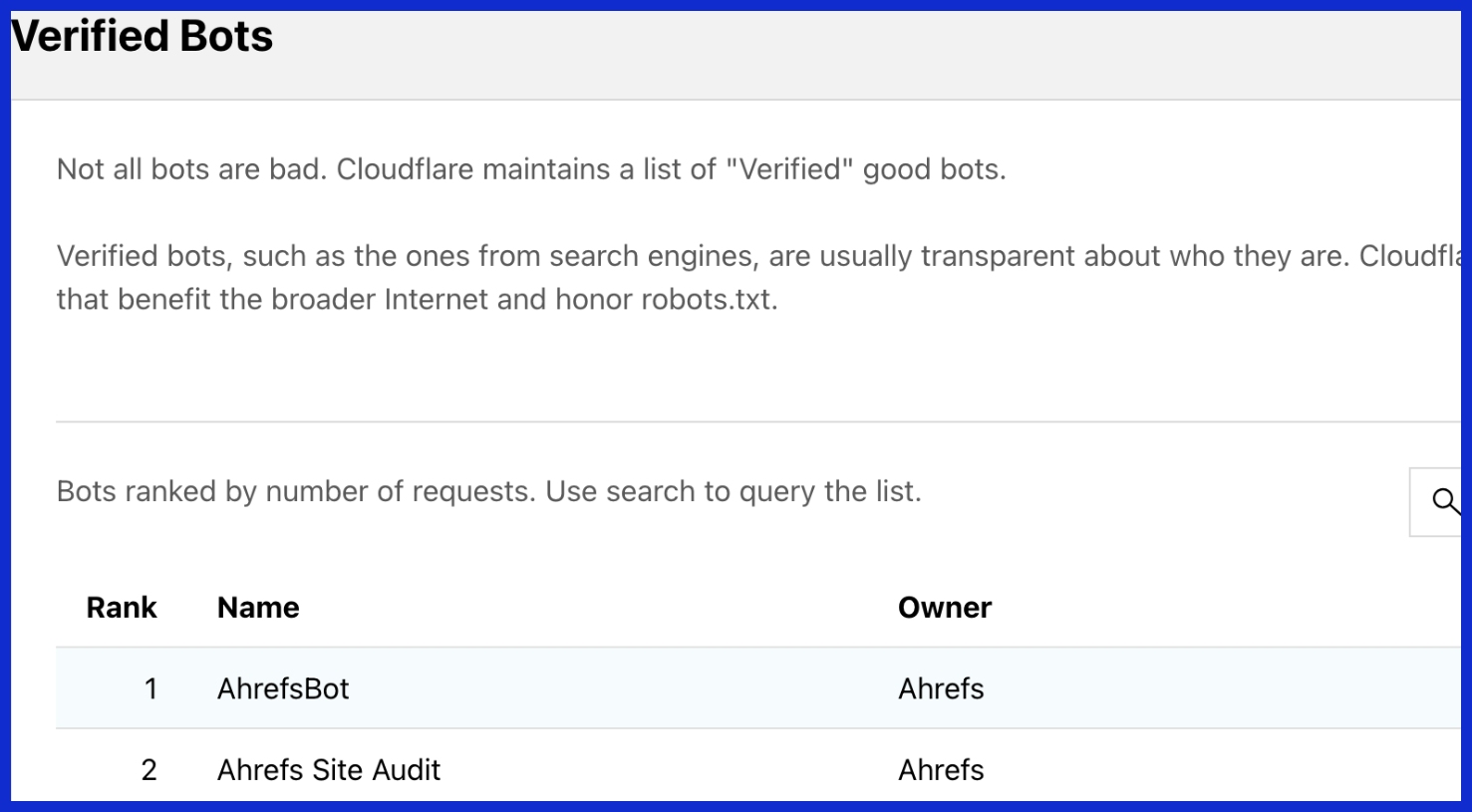

Ahrefsbot self-identifies with a specific user-agent string so its requests are identifiable in server logs. This openness enables site owners to track, report, or block its activity if they wish. We believe the bot’s compliance with standards such as honoring robots.txt and crawl-delay demonstrates a commitment to ethical crawling, minimizing disruption to web infrastructure.
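Because the bot announces itself in the user-agent string, finding its requests in an access log is a simple string match. The Python sketch below assumes logs in the common Apache/Nginx "combined" format; the sample lines are illustrative, using documentation-range IP addresses and an AhrefsBot user-agent in its commonly seen format (the version number on your server may differ). It counts AhrefsBot requests, bytes served, and paths fetched. Any client can claim this user-agent, so a match is not proof the request really came from Ahrefs.

```python
import re
from collections import Counter

# Matches one line of the "combined" log format used by Apache and Nginx by default.
LOG_RE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)


def summarize_ahrefsbot(lines):
    """Return (request count, bytes served, Counter of paths) for AhrefsBot lines."""
    hits, total_bytes, paths = 0, 0, Counter()
    for line in lines:
        m = LOG_RE.match(line)
        if not m or "ahrefsbot" not in m["agent"].lower():
            continue  # unparseable line or a different client
        hits += 1
        if m["size"].isdigit():  # size is "-" when no body was sent
            total_bytes += int(m["size"])
        parts = m["request"].split()  # e.g. ["GET", "/blog/", "HTTP/1.1"]
        if len(parts) >= 2:
            paths[parts[1]] += 1
    return hits, total_bytes, paths


UA = "Mozilla/5.0 (compatible; AhrefsBot/7.0; +http://ahrefs.com/robot/)"
sample = [
    f'203.0.113.7 - - [10/Mar/2025:12:00:00 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "{UA}"',
    f'203.0.113.7 - - [10/Mar/2025:12:00:10 +0000] "GET /pricing HTTP/1.1" 304 - "-" "{UA}"',
    '198.51.100.4 - - [10/Mar/2025:12:00:11 +0000] "GET / HTTP/1.1" 200 8192 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
print(summarize_ahrefsbot(sample))
```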

Unlike bots that disguise themselves or ignore webmaster instructions, Ahrefsbot identifies itself openly, which puts control in the hands of those managing the sites. Site owners who prefer not to be crawled can opt out through robots.txt, limiting both the resources the bot consumes and the data it collects about their pages.

Why Allow Ahrefsbot?

Allowing Ahrefsbot to crawl your site isn’t merely permitting one more bot to prowl; it’s a strategic move for site owners and SEO professionals who want trustworthy, timely data. Ahrefs reports that its index is updated every 15 to 30 minutes, which helps keep your site’s profile, backlinks, and crawl data fresh in a fast-moving web environment.

Digital Visibility

By allowing Ahrefsbot to crawl your site, you get your pages indexed in one of the world’s biggest third-party link databases. Your new articles, modifications, and updates become visible not only to Ahrefs users but also to the broader competitive-intelligence work built on that data. If you care about SEO, you need this visibility.

Search engines rely on signals such as links, and the more complete and precise your link data, the better equipped you are to react when algorithms shift or rankings suddenly drop. For instance, if you roll out a new landing page or land a premium backlink, Ahrefsbot’s frequent crawls help ensure those updates are recorded and appear in Ahrefs’ data quickly. That is near-real-time intelligence you can act on.

Competitive Insight

Allowing Ahrefsbot isn’t just beneficial for your own site; it also enhances your competitive research capabilities. By using Ahrefs, you gain a behind-the-scenes look at your competitors’ strategies. The Ahrefs SEO tools collect both internal and external link profiles, enabling you to identify where competitors acquire links, which content attracts the most traction, and how their keyword rankings evolve.

For agencies and consultants, this is gold. You can benchmark your site’s performance, identify gaps, and design campaigns that leapfrog competitors by targeting their weaknesses. This is not just theoretical. If you find that a competitor’s product guide is generating dozens of high-authority links, you can build something better, promote it, and steal those links.

The secret lies in having fresh, quality data, and Ahrefsbot’s regular crawls provide it. You can monitor shifts in the link landscape as they happen, so fewer changes catch you by surprise.

Content Discovery

Ahrefsbot follows links deep into your website, so content buried in your site architecture or behind convoluted navigation can still be found and indexed, as long as some crawlable page links to it. This matters most for big sites, where crucial pages can fall through the cracks and go unnoticed by crawlers and searchers alike.

Ahrefs’ crawl reports show which pages were found and which were not, allowing you to address issues before they cost you organic traffic. They also give you insight into how search engines and your competitors see your site architecture.
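Buried pages matter because a link-following crawler can only reach pages that something links to. The Python sketch below models that idea on a hypothetical link graph: it computes each page’s click depth from the homepage with a breadth-first search, then flags known pages that are unreachable (orphans) and pages deeper than a chosen threshold. The page list, the helper names, and the depth limit of 3 are assumptions for illustration; in practice the list of known pages might come from your XML sitemap or CMS, and Ahrefs’ own reports apply their own logic.

```python
from collections import deque


def click_depths(links, home="/"):
    """Breadth-first search: clicks needed to reach each linked page from the homepage."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # in BFS, the first visit is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def find_hidden_pages(links, known_pages, home="/", max_depth=3):
    """Return (orphans, too_deep): unreachable known pages and pages beyond max_depth."""
    depth = click_depths(links, home)
    orphans = sorted(set(known_pages) - set(depth))
    too_deep = sorted(page for page, d in depth.items() if d > max_depth)
    return orphans, too_deep


# Hypothetical site: each page maps to the pages it links to.
LINKS = {
    "/": ["/products/", "/blog/"],
    "/products/": ["/products/widgets/"],
    "/products/widgets/": ["/products/widgets/blue/"],
    "/products/widgets/blue/": ["/products/widgets/blue/spec-sheet"],
    "/blog/": [],
}
# Hypothetical list of every page you know exists, e.g. from a sitemap.
KNOWN_PAGES = list(LINKS) + ["/products/widgets/blue/spec-sheet", "/old-landing-page"]

orphans, too_deep = find_hidden_pages(LINKS, KNOWN_PAGES)
print("Orphans:", orphans)
print("Deeper than 3 clicks:", too_deep)
```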

Potential Downsides

All tools have their idiosyncrasies, and AhrefsBot is no different. As an active web crawler for SEO intelligence and site audits, it presents obstacles that even experienced digital marketers and technical SEOs ought to heed. Understanding these downsides helps teams balance insight against infrastructure when deciding whether to allow or block AhrefsBot.

Server Load

AhrefsBot can crawl aggressively, which can significantly affect server performance for website owners, especially those with limited bandwidth or on shared hosting. When AhrefsBot comes knocking, it’s rarely looking at just a page or two; it often traverses hundreds or thousands of URLs in one session. These crawl bursts can cause visible slowdowns that affect overall website performance.

Imagine a busy coffee shop that’s been invaded by a tour bus. Real site visitors may end up waiting longer for pages to load, resulting in a poor user experience and potentially hurting conversions. For smaller sites or resource-constrained platforms, the aggressive crawl rate of AhrefsBot can quickly consume bandwidth quotas, leading to unexpected expenses from hosting providers who charge overage fees.
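To judge whether crawl traffic threatens a bandwidth quota, a rough estimate is enough: requests per day times average response size times days in the billing period. The Python helper below does that arithmetic; the 20,000 requests per day, 150 KB average response, and 100 GB quota are hypothetical figures, so substitute numbers from your own logs and hosting plan.

```python
def monthly_crawl_bandwidth_gb(requests_per_day, avg_response_kb, days=30):
    """Estimate bandwidth a crawler uses over a billing period, in GB (1 GB = 1,000,000 KB)."""
    return requests_per_day * avg_response_kb * days / 1_000_000


# Hypothetical figures: replace with values from your own logs and hosting plan.
used_gb = monthly_crawl_bandwidth_gb(requests_per_day=20_000, avg_response_kb=150)
quota_gb = 100
print(f"~{used_gb:.0f} GB per month, {used_gb / quota_gb:.0%} of a {quota_gb} GB quota")
```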

Even larger organizations face challenges with excessive server strain. Some webmasters respond by throttling or outright blocking AhrefsBot, particularly if their site’s SEO strategies do not heavily favor Ahrefs’ ecosystem. While blocking the crawler can help improve site speed, it also limits the insights available in Ahrefs’ reports, which are valuable for effective SEO work.

Data Skew

AhrefsBot’s data is only as rich as what it can crawl. If a site blocks the bot, on purpose or inadvertently, Ahrefs can no longer refresh that site’s own pages, so its page-level and link data can become incomplete or stale within Ahrefs’ interface. This creates blind spots for the site owner and for anyone attempting to reverse engineer the site’s SEO footprint.

For extremely specialized sectors, where niche-specific keyword trends trump general data, Ahrefs alone can provide a misleading sense of certainty. The platform’s overwhelming quantity of reports and analytics is powerful but can swamp users seeking targeted answers, particularly if all they want is information for something like keyword research, not an in-depth site audit.

Over-reliance on Ahrefs’ backlink index can mislead. Backlink data is king, but it’s only half the SEO battle. If a competitor’s site blocks AhrefsBot, Ahrefs’ view of that site’s pages and outgoing links may be stale or sparse. This can bias competitive research and even lead to misinformed strategic choices.

Security Concerns

Webmasters occasionally worry about revealing too much technical information to crawlers such as AhrefsBot. The bot itself isn’t harmful, but its crawling can surface structural weaknesses, directory paths, or sensitive endpoints that are publicly reachable yet were never meant to be found.

For firms in finance, healthcare, or gambling, even a minor misconfiguration could open avenues for more malicious targeting. Data privacy is also a consideration: Ahrefs compiles huge amounts of data, and although its help resources are excellent, some customers need direct support for nuanced security questions.

Response times can lag, particularly for users outside the support team’s core operating hours, which leaves holes during essential troubleshooting moments.

How to Manage Ahrefsbot

Handling Ahrefsbot effectively means setting clear limits on how this active web crawler visits your site. SEO professionals rely on it for site audits and backlink analysis, so the goal is to keep those insights flowing while limiting the drain on your server resources, particularly on high-traffic or content-heavy websites.

Robots.txt File

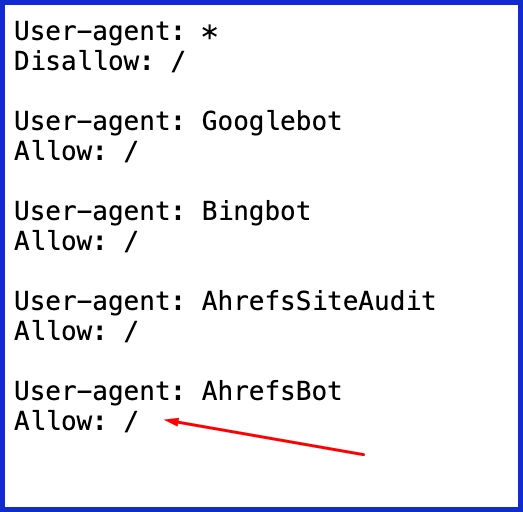

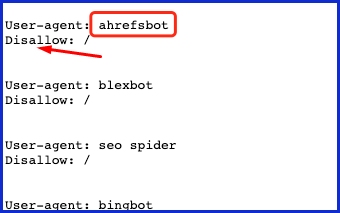

Your robots.txt file should be your first line of defense. It’s a plain text file in your website’s root directory that serves as a polite request to crawlers about what they may fetch. Ahrefsbot obeys these rules by default.

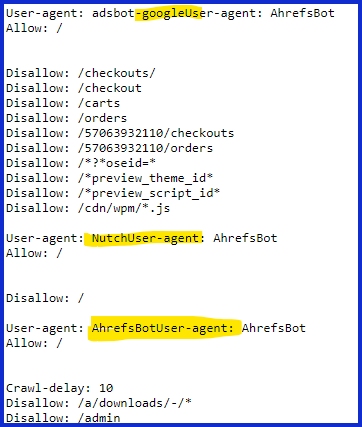

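Adding User-agent: AhrefsBot followed by Disallow: /private-folder/ prevents Ahrefsbot from crawling that part of your site. It’s a neat way to keep the bot out of private sections or resource-intensive pages without affecting your broader visibility. Keep in mind that robots.txt is publicly readable and is only a request, so it is not access control for genuinely sensitive content.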
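Here is that rule as it would appear in a robots.txt file, checked with Python’s standard urllib.robotparser to confirm which paths a compliant crawler would skip. /private-folder/ is a placeholder, and the empty Disallow: line in the catch-all group means every other crawler may fetch everything. This parser uses simple prefix matching, so treat it as an approximation of how any particular crawler reads the file.

```python
from urllib.robotparser import RobotFileParser

# The rule described above; /private-folder/ is a placeholder path.
ROBOTS_TXT = """\
User-agent: AhrefsBot
Disallow: /private-folder/

User-agent: *
Disallow:
"""

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())

# Show which paths each crawler may fetch under these rules.
for agent in ("AhrefsBot", "Googlebot"):
    for path in ("/private-folder/report.pdf", "/blog/"):
        print(agent, path, rules.can_fetch(agent, path))
```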

Permitting Ahrefsbot in robots.txt keeps your site’s backlink information in Ahrefs up to date. This is particularly handy for agencies and SEOs who depend on fresh link profiles. You’d probably want to block things like customer accounts or checkout pages from being crawled.

Robots.txt changes are easy to make and easy to reverse, which makes the file a flexible tool for ongoing management.

Crawl-Delay Directive

If you notice server slowdowns, particularly on eCommerce sites with tens of thousands of URLs, adjusting the crawl delay is a handy fix. Add a Crawl-delay directive to the AhrefsBot group in robots.txt; it specifies how many seconds the bot should wait between requests.

For example, Crawl-delay: 10 instructs Ahrefsbot to wait ten seconds between requests. A longer delay reduces server load but may slow how quickly your data refreshes in Ahrefs. It’s a balancing act.

Too high and you miss fresh insights; too low and your hosting may take a hit. Values between 5 and 20 seconds are a reasonable range to experiment with, depending on your server’s capacity and how much you need near-real-time data. Measure and monitor performance after each change, since the sweet spot depends on your site architecture and traffic patterns.
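The trade-off is easy to quantify, because a crawler that honors a delay of d seconds can make at most 86,400 / d requests per day. The Python sketch below reads the delay back out of a sample robots.txt with urllib.robotparser’s crawl_delay() and prints that ceiling for a few values. Bear in mind that Crawl-delay is not part of the robots.txt standard (RFC 9309) and some crawlers, Googlebot among them, ignore it; the helper name max_requests_per_day is hypothetical.

```python
from urllib.robotparser import RobotFileParser

SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

# Sample robots.txt group asking AhrefsBot to wait 10 seconds between requests.
ROBOTS_TXT = """\
User-agent: AhrefsBot
Crawl-delay: 10
"""


def max_requests_per_day(delay_seconds):
    """Upper bound on daily requests from one crawler that waits delay_seconds between them."""
    return SECONDS_PER_DAY // delay_seconds


rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())
delay = rules.crawl_delay("AhrefsBot")  # reads the 10 from the file above

for d in (5, delay, 20):
    print(f"Crawl-delay {d:>2}s -> at most {max_requests_per_day(d):,} requests per day")
```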

User-Agent Blocking

Blocking Ahrefsbot by user-agent gives you immediate control. In robots.txt, User-agent: AhrefsBot with Disallow: / blocks all crawling by the bot. For stricter enforcement at the server level, your .htaccess file (on Apache) lets you deny requests based on user-agent or IP address.
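The .htaccess approach applies to Apache. If your site is served by a Python application instead, a comparable server-level block could live in WSGI middleware, sketched below. The function names are hypothetical; the middleware returns 403 Forbidden to any request whose User-Agent contains "ahrefsbot" (case-insensitive) and passes everything else through. Like any user-agent rule, it only stops clients that identify themselves honestly.

```python
def block_user_agents(app, blocked_tokens=("ahrefsbot",)):
    """Wrap a WSGI app so requests whose User-Agent contains a blocked token get a 403.

    Tokens must be lowercase because the User-Agent is lowercased before matching.
    """

    def middleware(environ, start_response):
        agent = environ.get("HTTP_USER_AGENT", "").lower()
        if any(token in agent for token in blocked_tokens):
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [b"Forbidden\n"]
        return app(environ, start_response)  # everyone else reaches the real app

    return middleware


def hello_app(environ, start_response):
    """Stand-in for your real WSGI application."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Hello\n"]


app = block_user_agents(hello_app)


def call(wsgi_app, user_agent):
    """Invoke a WSGI app directly and return (status, body) for a quick check."""
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    body = b"".join(wsgi_app({"HTTP_USER_AGENT": user_agent}, start_response))
    return captured["status"], body


print(call(app, "Mozilla/5.0 (compatible; AhrefsBot/7.0; +http://ahrefs.com/robot/)"))
print(call(app, "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"))
```

In production, the wrapped app would be handed to whatever WSGI server already runs your site; the call helper exists only to exercise the middleware without a server.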

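For example, Deny from 51.222.152.133 or Deny from 54.36.148.1 in .htaccess blocks those specific addresses, and Allow from all lets everyone else through. Treat such lists as examples rather than a complete set: Ahrefs publishes its current IP ranges, and they change over time. These Order/Deny/Allow directives are Apache 2.2-style syntax; on Apache 2.4, the equivalent rules use Require directives.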

Whichever method you choose, verify that visits claiming to be Ahrefsbot are legitimate. Ahrefs publishes its user-agent string and IP ranges, so you can check your logs against them and spot impersonators that borrow the crawler’s name to slip past your rules.
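Checking a visit against published IP ranges is straightforward with Python’s ipaddress module. In the sketch below, the ranges are placeholders taken from the RFC 5737 documentation blocks, not Ahrefs’ real addresses; replace them with the list Ahrefs currently publishes. A request that claims to be AhrefsBot but comes from outside those ranges is flagged as a possible impersonator. The function name classify_visit is hypothetical.

```python
import ipaddress

# Placeholder ranges (RFC 5737 documentation blocks), NOT real Ahrefs addresses.
# Replace them with the IP ranges Ahrefs currently publishes.
PUBLISHED_RANGES = [ipaddress.ip_network(c) for c in ("192.0.2.0/24", "198.51.100.0/24")]


def classify_visit(ip, user_agent, ranges=PUBLISHED_RANGES):
    """Label a request by whether it claims to be AhrefsBot and comes from a listed range."""
    if "ahrefsbot" not in user_agent.lower():
        return "not claiming AhrefsBot"
    addr = ipaddress.ip_address(ip)
    if any(addr in network for network in ranges):
        return "verified AhrefsBot"
    return "possible impersonator"


UA = "Mozilla/5.0 (compatible; AhrefsBot/7.0; +http://ahrefs.com/robot/)"
print(classify_visit("192.0.2.10", UA))    # claims AhrefsBot, inside a listed range
print(classify_visit("203.0.113.50", UA))  # claims AhrefsBot, outside the ranges
print(classify_visit("203.0.113.50", "Mozilla/5.0 (X11; Linux x86_64)"))
```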

Server-level blocks are stricter because the server enforces them whether or not the bot chooses to honor robots.txt, which suits sites with strict privacy or bandwidth requirements.

Conclusion

Ahrefsbot is a significant web crawler behind the scenes of one of the most reliable SEO tools available. Allowing it into your site provides tangible rewards, including fresh backlink information, improved keyword data, and a more comprehensive understanding of your site’s exposure. Sure, every bot has its quirks. It can sometimes consume server resources or crawl more than you want.

It’s easy to manage Ahrefsbot with robots.txt, and most site owners consider the trade-offs well worth it. At the end of the day, knowing how Ahrefsbot works and where it fits in the digital ecosystem enables you to make smart decisions about your site’s visibility and performance—especially when paired with consistent SEO practices from trusted teams like SERPninja.

Frequently Asked Questions

What is Ahrefsbot?

Ahrefsbot is a web crawler developed by Ahrefs. It crawls sites to gather information for Ahrefs’ SEO software, assisting in the examination of backlinks, keywords, and site metrics.

Is Ahrefsbot safe for my website?

Yes, Ahrefsbot is generally safe. It complies with industry crawling standards such as robots.txt and does not damage sites, which is part of why SEO professionals rely on Ahrefs.

Can I block Ahrefsbot from crawling my site?

Yes. Add a disallow rule for AhrefsBot to your robots.txt (User-agent: AhrefsBot followed by Disallow: /) if you don’t want it crawling your site.

Why should I allow Ahrefsbot to crawl my website?

Permitting Ahrefsbot, an active web crawler, gets your website included in Ahrefs’ database, making its data available to SEO professionals and site owners researching backlinks.

Does Ahrefsbot affect site speed?

Generally, Ahrefsbot does not significantly affect site speed, although it can consume noticeable bandwidth and server resources on smaller servers or shared hosting. A crawl delay in robots.txt helps in those cases.

How do I identify Ahrefsbot in my logs?

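Ahrefsbot identifies itself with ‘AhrefsBot’ in the user-agent field of your web server logs. Because user-agents can be spoofed, confirm suspicious visits against the IP ranges Ahrefs publishes.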

Is it ethical for Ahrefsbot to crawl my website?

Ahrefsbot, an established SEO crawler, abides by robots.txt and industry standards, which gives site owners control over what it collects from their sites.