Key Takeaways

-

Embeddings convert intricate, high-dimensional data into simplified, lower-dimensional vectors, allowing AI systems to efficiently process and analyze information.

-

These vector representations maintain important relationships in the input data, enabling models to capture meaning, context, and similarity between entities.

-

Both static and contextual embedding models have their strengths. Selecting the appropriate one depends on your objectives and your data.

-

Embeddings aren’t just for text—they drive image recognition, audio classification, and combine different data types for deeper understanding.

-

Efficient embeddings simplify machine learning pipelines, allowing for quicker training, enhanced precision, and scalable applications across industries.

-

Beyond powering ChatGPT, embeddings supercharge recommendation systems, search engines, and other AI-powered apps with more personalized, relevant results.

An embedding in AI is a mathematical depiction of information, such as text or visuals, that allows computers to grasp intricate data in an easier format.

These embeddings convert real-world content into vectors, which allows algorithms to more readily identify patterns, calculate similarity, and accomplish tasks such as language translation or image recognition.

Embeddings are crucial to grasp if you’re dealing with machine learning models, because they underpin contemporary AI use cases.

What are AI Embeddings?

AI embeddings are such continuous vector representations, which convert complex, often high-dimensional data—words, images, user behaviors—into a more tractable lower-dimensional representation. This maintains important relationships and structure such that crucial patterns are preserved, even when the data gets compressed.

These embeddings serve as a bridge, allowing models to understand and operate on unstructured data effectively, essential for delivering value from the noisy, real-world data we all grapple with.

1. The Translation

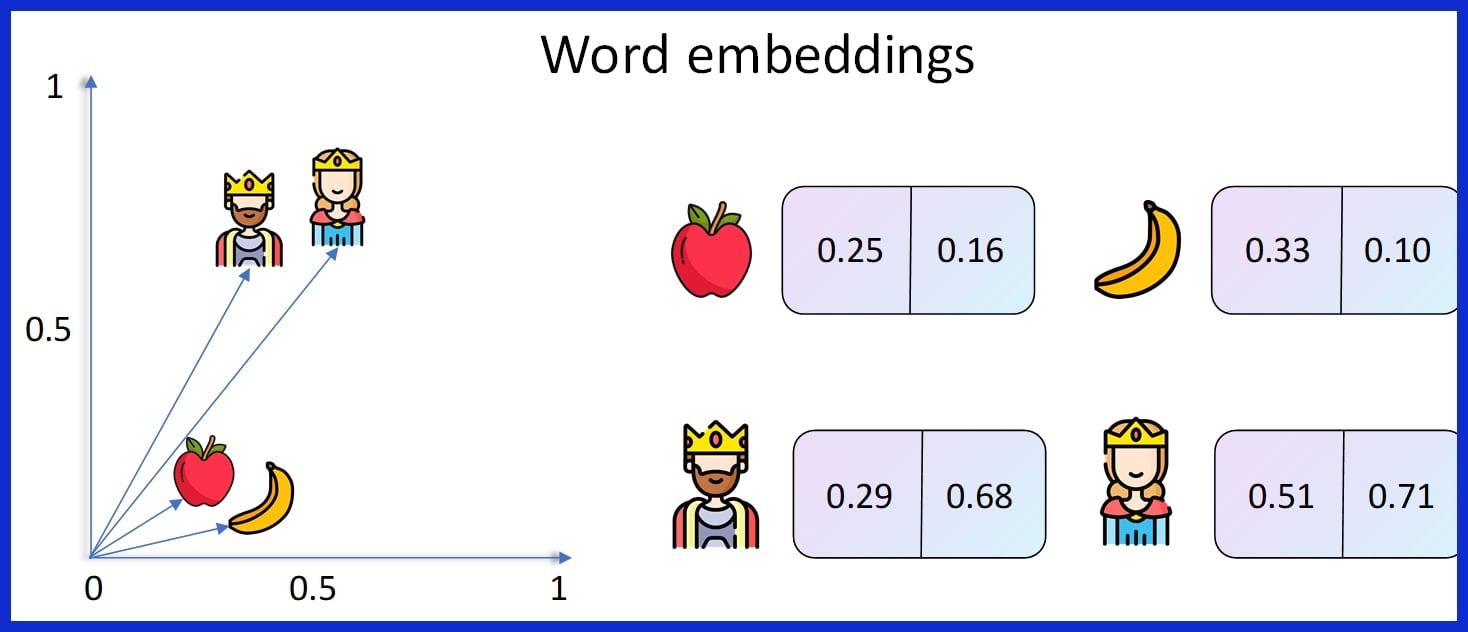

Embeddings convert everything from language to pictures into a collection of numbers — vectors — that an algorithm can understand. Every entity — be it a word or a product — gets mapped to a distinct vector in this new space.

This mapping is important since it reduces an ungainly heap of raw information into something a model can consume. Complex relationships, like synonyms in language or similar items in e-commerce, are easier to spot. For instance, in Word2Vec, the words ‘king’ and ‘queen’ end up near each other, capturing gender and royalty in their locations.

Translation via embeddings implies that machine learning models can at last ‘sense’ relationships among objects, enhancing their capacity to categorize, suggest, or group with improved precision.

2. The Vector

Vectors are the meat and potatoes of embeddings—simple numerical arrays, but every orientation and distance in vector space narrates meaning. They enable similarities between data points to be computed directly, with metrics such as cosine similarity or Euclidean distance.

The higher the number of dimensions, the more nuance can be captured in a vector — at the cost of increased computation. Vector databases such as FAISS or Pinecone then store these vectors in a way that enables systems to retrieve similar items rapidly, essential for real-time recommendations or search.

3. The Context

Embeddings aren’t just numeric—they encode context, as well. Language models, for example, a word’s definition varies based on its context. Methods like BERT or ELMO capture these subtleties, so “bank” in “river bank” and “bank account” have distinct vectors.

This context-awareness helps AI systems understand intent, sentiment, and relationships, rendering results more accurate and relevant. In practice, this translates to improved text classification, clever chatbots, and more intuitive translator engines.

4. The Space

Embedding space (known as latent space) reduces computational overhead by transforming massive datasets into small, structured vectors. This, in turn, makes just about everything from clustering to anomaly detection more efficient.

Embeddings enhance model generalization by clustering related data, allowing machine learning algorithms to recognize patterns even in unseen contexts. They enable cross-modal search—think searching for images via text, or vice versa—by embedding all types of data into the same space.

Feature extraction is a breeze, and downstream tasks can be faster, smarter.

Why Embeddings Matter

Embeddings lie at the center of powerful AI — they distill high-dimensional raw data into compact, structured vectors that machine learning models can actually process. They’re essential for transforming a wall of text, an image, or even a catalog of product listings into something structured but still valuable. By utilizing custom embeddings, you can efficiently manage complex data types and enhance the overall performance of the AI model. Learning platforms like Coursiv can help you understand embeddings in depth and how to apply them effectively in real-world AI projects.

When you distill data this way, you cut computational overhead—less data bloat means quicker processing, less resource consumption, and models trainable on actual hardware. Smaller embeddings, in other words, allow for less training time, which is key for companies deploying models at scale or with rapid iterations.

Embeddings make AI scalable — so you don’t need a football field-sized data center just to power a decent search engine.

Efficiency

Embeddings enhance model efficacy by identifying underlying connections. Words such as “bank” (the financial institution) and “bank” (the side of a river) get located in separate positions in the vector space, enabling models to infer meaning from context.

This is important in classification, retrieval, or clustering tasks. Embeddings enable machines to cluster similar things, suggest related content, or provide more relevant search results. For instance, an embeddings-powered blog might recommend articles you care about, not just what comes next in time.

Performance

With embeddings, models don’t just read data—they grasp subtlety. They differentiate between “apple”, the fruit, and “Apple”, the tech company, allowing a search engine to dish up recipes or product reviews accordingly.

This capacity to represent fine semantic distinctions implies recommendation systems can push you toward content that fits your tastes, not just what’s in general demand. When you want to cluster articles, embeddings cluster them by actual similarity, not keyword overlap.

RAG uses embeddings in clever ways for question answering – retrieving pertinent passages to improve accuracy. Even in domain-specific search, for example, finding the right faucet tap, embeddings help you float through an ocean of choices and dock where you desire.

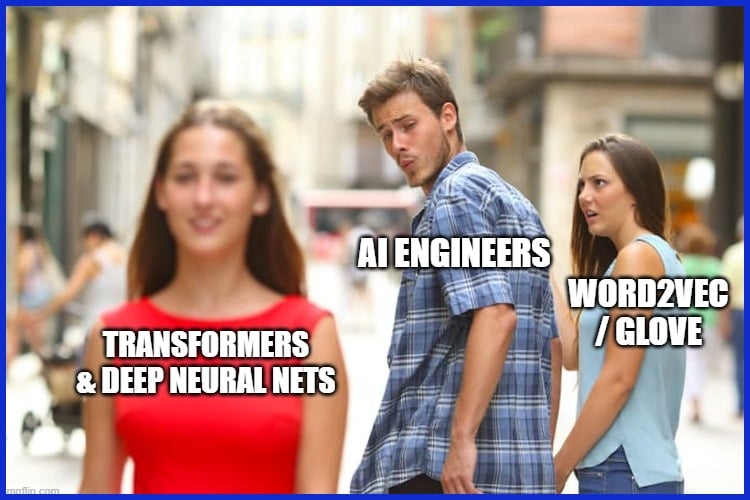

Nuance

Creating embeddings requires machine learning methods such as word2vec, GloVe, or contemporary neural networks like transformers. Your embeddings are only as good as your training data, garbage in, garbage out.

This is why the selection of the best algorithm for your content type (text, images, audio) matters, because it determines how effectively your model can capture relationships. Neural nets, particularly deep ones, excel at encoding complex data, allowing you to compute content similarity, construct ‘related content’ features, or even perform cross-sentence vibe analysis.

Embeddings open a horizon of possibilities to analyze, retrieve, and recommend content, enabling AI systems to become more adaptable and perceptive.

How Embeddings are Created

Embeddings are the backbone of modern AI. They turn messy, unstructured data into vectors (numbers in multi-dimensional space) that machines can understand.

The Basics

-

Embeddings = n-dimensional coordinates

-

Similar items (like synonyms or related images) get grouped close together

-

Useful for search, recommendations, classification, and more

Types of Embeddings

1. Static Embeddings (Word2Vec, GloVe)

-

Each word gets one fixed vector, no matter the context

-

Trained on massive text datasets (like Wikipedia or Google News)

-

Example:

-

“King – Man + Woman ≈ Queen”

-

-

Pros: Fast, efficient, great for simple tasks (e.g., document classification, search)

-

Cons: Can’t handle polysemy (“bank” = riverbank or financial bank — same vector)

2. Contextual Embeddings (BERT, ELMo, Universal Sentence Encoder)

-

Each word gets a different vector depending on context

-

Example:

-

“bass” (music) ≠ “bass” (fish)

-

-

Built using deep neural networks (transformers) trained on massive data

-

Pros: Handles nuance, ambiguity, and synonyms much better

-

Best for tasks like:

-

Sentiment analysis

-

Conversational AI

-

Question answering

-

-

Cons: More computationally expensive

Types of Embedding Models

There are a few main flavors of embedding models, each engineered to convert noisy, high-dimensional inputs into dense, semantically rich vectors. These powerful embeddings are the silent dynamos powering more intelligent search, improved recommendations, and language comprehension. Not just for words—images, audio, and even cities or TV shows all enjoy the benefits of custom embeddings.

Static Models

Static embedding models encode data points as static vectors independent of context. Word2Vec, presented by Mikolov et al., and GloVe are among the most prominent. Both algorithms map words to unique vectors: “bank” gets one vector, whether it’s a riverbank or a financial institution.

These models learn from massive text corpora, capturing generic semantic relationships. For example, “king” – “man” + “woman” brings you close to “queen”. The magic here is that it’s efficient. Static embeddings are quick to train, simple to store, and make similarity searches, such as related products or synonyms, super fast.

Cosine similarity is the de facto metric that measures the angle between two vectors to determine their similarity. Simplicity has trade-offs. Static models are unable to differentiate between the different semantics of the same word in different sentences. If ‘apple’ pops up in a fruit salad recipe and a tech article, the vector won’t move.

This limitation renders static models suboptimal for nuanced tasks.

Contextual Models

Contextual embedding models represent each word differently depending on the words around it. Rather than calculating a static vector, these models—such as BERT and GPT—generate vectors that capture each word’s context. For instance, ‘apple’ in ‘She ate an apple’ and ‘Apple released a new phone’ will have different embeddings.

BERT has become the foundation of natural language processing, powering everything from chatbots to text search and classification. The advantage in this case is depth. Contextual models have a sense of meaning in context, capturing nuanced relationships and excelling at more difficult tasks like sentiment analysis or question answering.

They aid recommendation systems where user preferences evolve. These heavier models provide a leap in accuracy and flexibility over static approaches.

Embeddings Beyond Text

Embeddings go far beyond words. They can represent text, images, audio, and even structured data as vectors in a shared space. This lets AI compare and connect very different types of information, revealing relationships that raw data alone can’t capture.

Today, embeddings power recommendations, smarter search, and personalization. From streaming platforms suggesting shows, to online stores recommending products, to healthcare systems spotting similar patient cases—embeddings make AI more adaptable and relevant across industries.

Real-World Applications

Embeddings are what make modern AI feel smart and useful. They take raw information—like text, images, or audio—and turn it into structured representations that algorithms can understand. This unlocks connections and insights that wouldn’t be obvious from the raw data.

Where You See Them in Action

-

Recommendations: Streaming platforms suggest shows, or online stores recommend products, by comparing embeddings of your history with similar items.

-

Search: Modern search engines use embeddings to go beyond keywords—helping you find “a cat with sunglasses” even if those words don’t appear in the file name.

-

Data Grouping: Customer reviews, medical records, or financial transactions can be clustered by meaning, not just exact wording.

-

Images & Audio: Find visually similar photos or related music clips, even when file names don’t match.

Across industries—entertainment, healthcare, e-commerce, or education—embeddings power smarter recommendations, better search, and faster insights. They help bring order to massive, messy datasets and make AI feel more natural and human-like.

Conclusion

Embeddings are like the hidden engine that makes AI feel smart. They quietly turn words, images, and even sounds into something machines can understand—so messy data becomes meaningful, useful, and even a little intuitive.

They’re behind the recommendations you see, the search results that feel spot-on, and the image recognition that just works. By connecting raw data with real understanding, embeddings power some of the most exciting AI applications today.

At SERPninja.io, we help brands tap into these advances so their content isn’t just seen, but truly discovered in an AI-first world. Staying on top of how embeddings evolve isn’t just a technical detail—it’s the difference between keeping up and leading the way.

Frequently Asked Questions

What is an embedding in AI?

An embedding in AI, often referred to as a vector representation of data, such as words or images, aids machines in understanding the semantic information and meaningful relationships within complex data types.

Why are embeddings important in AI?

Embeddings enable AI models to handle and juxtapose information more effectively, utilizing powerful embeddings to encode semantics, context, and relations, which enhances performance on tasks like search and recommendation.

How are embeddings created?

Embeddings were generated using powerful embeddings from machine learning models trained on big data, which learn to place similar entities nearby in a dimensional embedding space.

What types of embedding models exist?

Popular among them are text embeddings, image embeddings, and graph embeddings, each designed for specific data types, such as unstructured data like text or images.

Can embeddings be used for more than just text?

Yes, embeddings, such as text embeddings and image embeddings, are crucial for AI in comprehending and connecting various types of unstructured data.

What are some real-world uses of embeddings?

Embeddings, such as text embeddings and ai embedding models, are behind search engines, recommendation systems, and language translation, enhancing precision and user engagement across countless domains.

Are embeddings limited to English or specific languages?

No, we can generate custom embeddings for any language. These embeddings assist AI models in comprehending and managing world data, enabling multilingual purposes.