Key Takeaways

- Chain of Thought Reasoning enables AI to break down complex problems into explicit, manageable steps, yielding more precise and clear results for users worldwide.
- This incremental process reflects human problem-solving, enabling people with varying experience and expertise to follow and have confidence in AI reasoning.
- Advanced prompting and feedback loops help refine AI’s reasoning abilities over successive iterations.
- Chain of Thought Reasoning enables AI to tackle complex problems more reliably in domains such as mathematics, logic puzzles, and creative work.
- Self-correction and retrospective thinking help AI learn from mistakes, leading to improved accuracy and better choices over time.
- By going beyond pattern matching, this technique nudges AI toward more complex reasoning and might represent a pivotal stage toward more general, humanlike intelligence.

In chain of thought reasoning, every step is constructed upon its predecessor, resulting in a well-defined logical progression from question to solution. In domains such as AI and education, this approach facilitates clear, incremental reasoning that renders difficult tasks both more comprehensible and more verifiable.

As you’ll see, mastering chain of thought reasoning allows professionals to dismantle challenges, optimize decisions, and articulate solutions more effectively. The subsequent sections dive into its applications and advantages.

What is Chain of Thought Reasoning?

Chain-of-Thought (CoT) reasoning is thinking out loud—step by step. Instead of jumping straight to an answer, you break a problem down into smaller pieces, with each step building on the last until you reach a clear solution.

In areas like AI and education, this kind of reasoning is powerful. It turns complicated problems into something easier to follow and double-check, making the whole process more transparent.

By practicing CoT reasoning, people (and AI systems) can tackle challenges with more confidence, make smarter choices, and explain their solutions in a way that just makes sense. In this article, we’ll look at how it works, why it matters, and the real-world benefits it brings.

1. The Core Idea

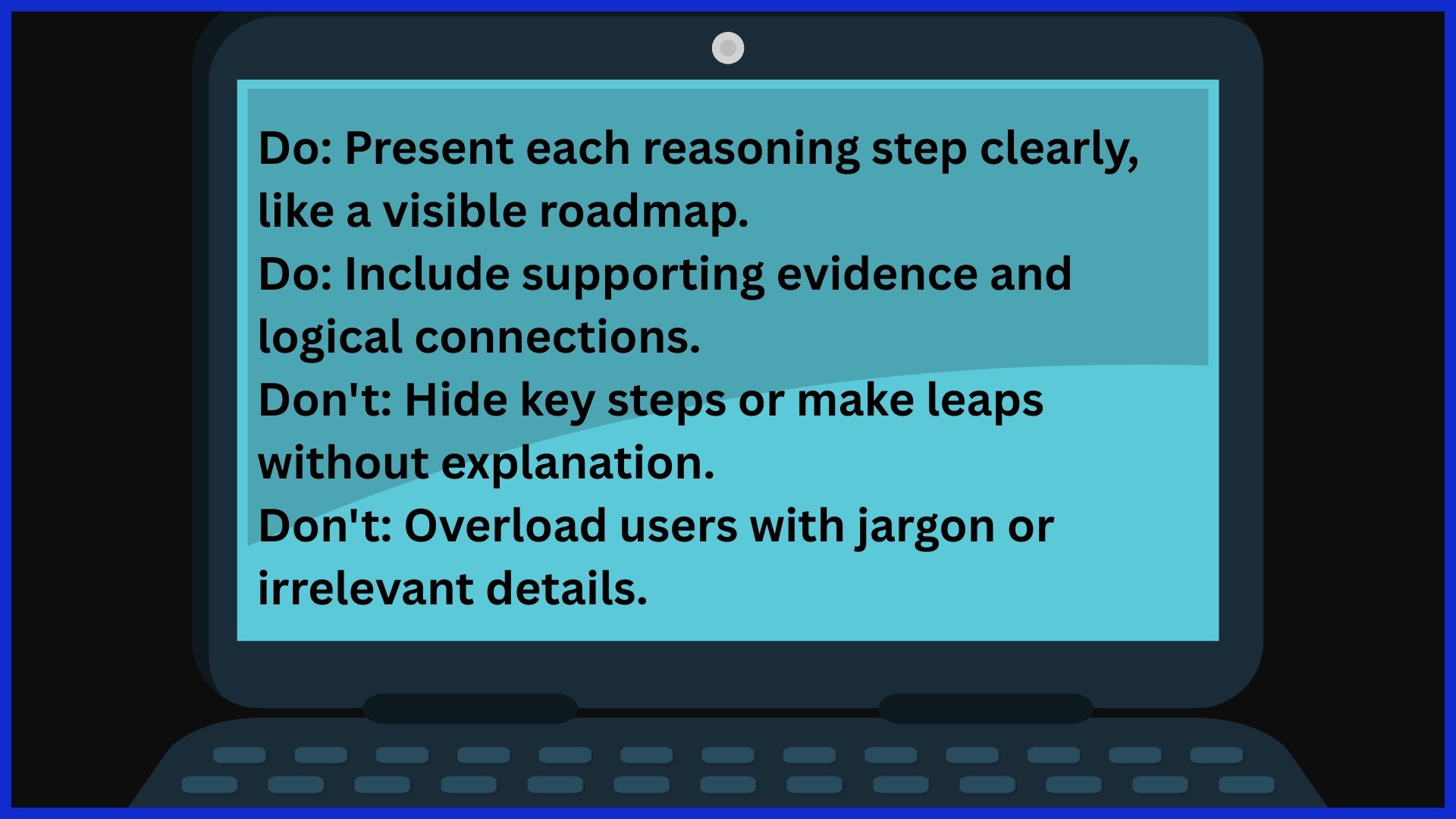

At its core, Chain of Thought Reasoning organizes the way AI models think, favoring an explicit reasoning process over an intuitive jump. This matters for any nontrivial task, such as multi-step math or logic puzzles. It allows the model to pause, verify its reasoning at each step, and correct course if needed.

Intermediate reasoning steps matter a great deal. By spelling out each step, an AI can catch errors earlier and strengthen its reasoning, which tends to produce more precise responses. This structure not only improves performance but also makes the whole procedure more transparent to end users.

This move away from black-box outputs toward stepwise explanations is fundamental to making AI both more transparent and more trustworthy.

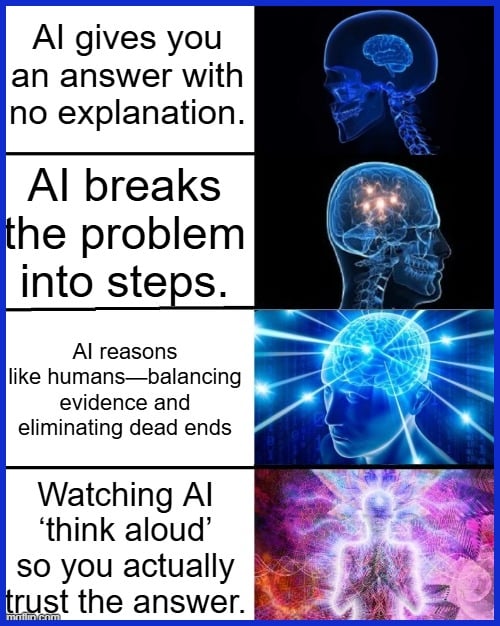

2. The Human Analogy

Humans don’t typically solve large problems in a single pass; instead, we break them into manageable chunks. When you face a challenging decision, you weigh the evidence and think through options, eliminating dead ends before reaching a conclusion. Chain of Thought Reasoning in AI mirrors this habit.

By emulating human-like reasoning, AI models can make decisions that seem less mechanical and are easier to check. The analogy also helps users understand how the AI reached its answer, which strengthens trust in the system and removes some of its air of mystery.

Observing an AI ‘think aloud’ demystifies its decision-making and makes space for co-creation instead of mere consumption.

3. The Prompting Process

To make the most of CoT reasoning, you begin with a well-defined and organized prompt. Occasionally, you provide the model with specific ‘hints’, specifying the steps to take. Other times, you might provide examples — one-shot or few-shot prompting — to illustrate what a good stepwise solution looks like.

Good prompts help steer the model, making it process the problem step-by-step without jumping ahead. The process is dynamic: if the first response isn’t perfect, you can refine your prompt, nudging the model to dig deeper or clarify its logic.

This iteration is instrumental for generating outputs that are both accurate and interpretable.
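
As a rough illustration of few-shot prompting, the sketch below assembles a prompt from one worked example and a new question. The names `WorkedExample` and `build_few_shot_prompt`, the `Q:`/`Reasoning:`/`A:` layout, and the sample questions are all assumptions made for this example; real prompts vary by model and task.

```python
from dataclasses import dataclass


@dataclass
class WorkedExample:
    """One demonstration: a question, its reasoning steps, and the answer."""
    question: str
    steps: list[str]
    answer: str


def build_few_shot_prompt(examples: list[WorkedExample], question: str) -> str:
    """Assemble a few-shot CoT prompt from worked examples plus a new question."""
    parts = []
    for ex in examples:
        numbered = "\n".join(f"{i}. {s}" for i, s in enumerate(ex.steps, start=1))
        parts.append(f"Q: {ex.question}\nReasoning:\n{numbered}\nA: {ex.answer}")
    # End with an open "Reasoning:" cue so the model continues step by step.
    parts.append(f"Q: {question}\nReasoning:")
    return "\n\n".join(parts)


# Hypothetical demonstration and question, chosen only for illustration.
demo = WorkedExample(
    question="A shop sells pens at 3 for $2. How much do 12 pens cost?",
    steps=[
        "12 pens is 12 / 3 = 4 groups of three.",
        "Each group costs $2, so 4 * 2 = $8.",
    ],
    answer="$8",
)
prompt = build_few_shot_prompt(
    [demo], "A train travels 60 km in 1.5 hours. What is its average speed?"
)
print(prompt)
```

Passing a single example gives one-shot prompting; passing several gives few-shot prompting. If the first response skips steps, the examples or the closing cue are the natural places to refine.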

How CoT Reasoning Works

Chain of Thought (CoT) reasoning is a prompting technique that asks language models to solve problems stepwise. Rather than producing only a final answer, the model lays out its reasoning, making each decision explicit. This method decomposes complex questions into a series of simpler steps, using prompts as cues for logical reasoning.

Clear prompting, direct supervision, and iterative prompt engineering are at the core of building successful CoT systems. CoT reasoning builds confidence in generative AI by showing the path to a conclusion, which can help reduce hallucinations and improve precision.

Foundational Prompting

Foundational prompting is about setting up strong “base prompts” that guide how an AI thinks. Think of it like the blueprint of a house—it shapes the way the AI breaks down a problem. Instead of rushing to an answer, these prompts encourage the model to reason step by step.

- Example:
  - Shallow prompt: “What’s the capital of France?”
  - Foundational prompt: “Think step by step about European countries. Which one is France? What’s its capital?”
- By nudging the model to take its time, you:
  - Avoid shallow, rushed answers.
  - Reduce mistakes or hallucinations.
  - Get clearer, more trustworthy reasoning paths.

This method is especially powerful in areas like solving math problems, analyzing legal cases, or diagnosing medical issues. In each, foundational prompting helps the AI “show its work,” making its answers more transparent and reliable.
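
One way to picture a base prompt is as a reusable template wrapped around every question. The sketch below is a minimal version under stated assumptions: the template wording, the `Answer:` convention, and the helper names `foundational_prompt` and `extract_answer` are hypothetical, and the sample response is hand-written rather than real model output.

```python
from typing import Optional

# Hypothetical base prompt; the exact wording is an assumption, not a standard.
BASE_PROMPT = (
    "Work through the problem step by step, numbering each step.\n"
    "Finish with a single line that starts with 'Answer:'.\n\n"
    "Problem: {problem}"
)


def foundational_prompt(problem: str) -> str:
    """Wrap a raw question in the step-by-step base prompt."""
    return BASE_PROMPT.format(problem=problem.strip())


def extract_answer(response: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if it is missing."""
    for line in reversed(response.splitlines()):
        if line.strip().startswith("Answer:"):
            return line.split("Answer:", 1)[1].strip()
    return None


wrapped = foundational_prompt("What's the capital of France?")

# Hand-written stand-in for a model response, used only to show the parsing.
sample_response = "1. France is a country in Europe.\n2. Its capital is Paris.\nAnswer: Paris"
final_answer = extract_answer(sample_response)
print(wrapped)
print(final_answer)
```

Asking for a fixed final line keeps the reasoning visible while still making the answer easy to pull out programmatically.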

Automated Prompting

Automated prompting is when algorithms generate the prompts instead of humans. This makes the process faster, more consistent, and easier to scale—especially for complex reasoning tasks where manually writing prompts would take forever.

- Why it matters:
  - Handles large volumes of queries.
  - Keeps reasoning consistent across tasks.
  - Adapts and improves over time through feedback loops.
- How it works: The system looks at the model’s output, then tweaks future prompts based on what worked (or didn’t). Over time, this makes the reasoning more accurate.
- Challenges:
  - Making sure prompts stay relevant and coherent.
  - Preventing shallow or misleading reasoning from being repeated at scale.

Even with these hurdles, automated prompting is essential in settings where thousands of questions need clear, step-by-step answers—like research, data analysis, or large enterprise systems.
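
The feedback loop described above can be sketched as scoring candidate prompt templates against questions with known answers and keeping the best one. Everything here is an assumption for illustration: `select_prompt` is a hypothetical helper, the scoring rule is a simple suffix match, and `stub_model` is a deterministic stand-in for a real model call.

```python
from typing import Callable


def select_prompt(
    templates: list[str],
    labelled_questions: list[tuple[str, str]],
    model: Callable[[str], str],
) -> tuple[str, dict[str, float]]:
    """Score each template by accuracy on labelled questions; return the best."""
    scores: dict[str, float] = {}
    for template in templates:
        correct = 0
        for question, expected in labelled_questions:
            output = model(template.format(question=question))
            # Simplistic check (an assumption): the output must end with the answer.
            if output.strip().endswith(expected):
                correct += 1
        scores[template] = correct / len(labelled_questions)
    best = max(scores, key=scores.get)
    return best, scores


def stub_model(prompt: str) -> str:
    """Stand-in for a real model: only 'reasons' when asked to go step by step."""
    if "step by step" in prompt:
        return "2 + 2 is 4. Answer: 4"
    return "Answer: 5"


templates = ["{question}", "Think step by step. {question}"]
best, scores = select_prompt(templates, [("What is 2 + 2?", "4")], stub_model)
print(best, scores)
```

In a real system the scoring step is where irrelevant or misleading prompts would be filtered out before they are reused at scale.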

Why CoT is Effective

Chain of thought (CoT) reasoning is particularly effective for steering AI through logical steps. Instead of providing responses that could simply seem plausible, CoT forces models to demonstrate their effort, detailing how each determination is made. The method has matured, presently facilitating anything from textual outline generation to advanced, multimodal reasoning.

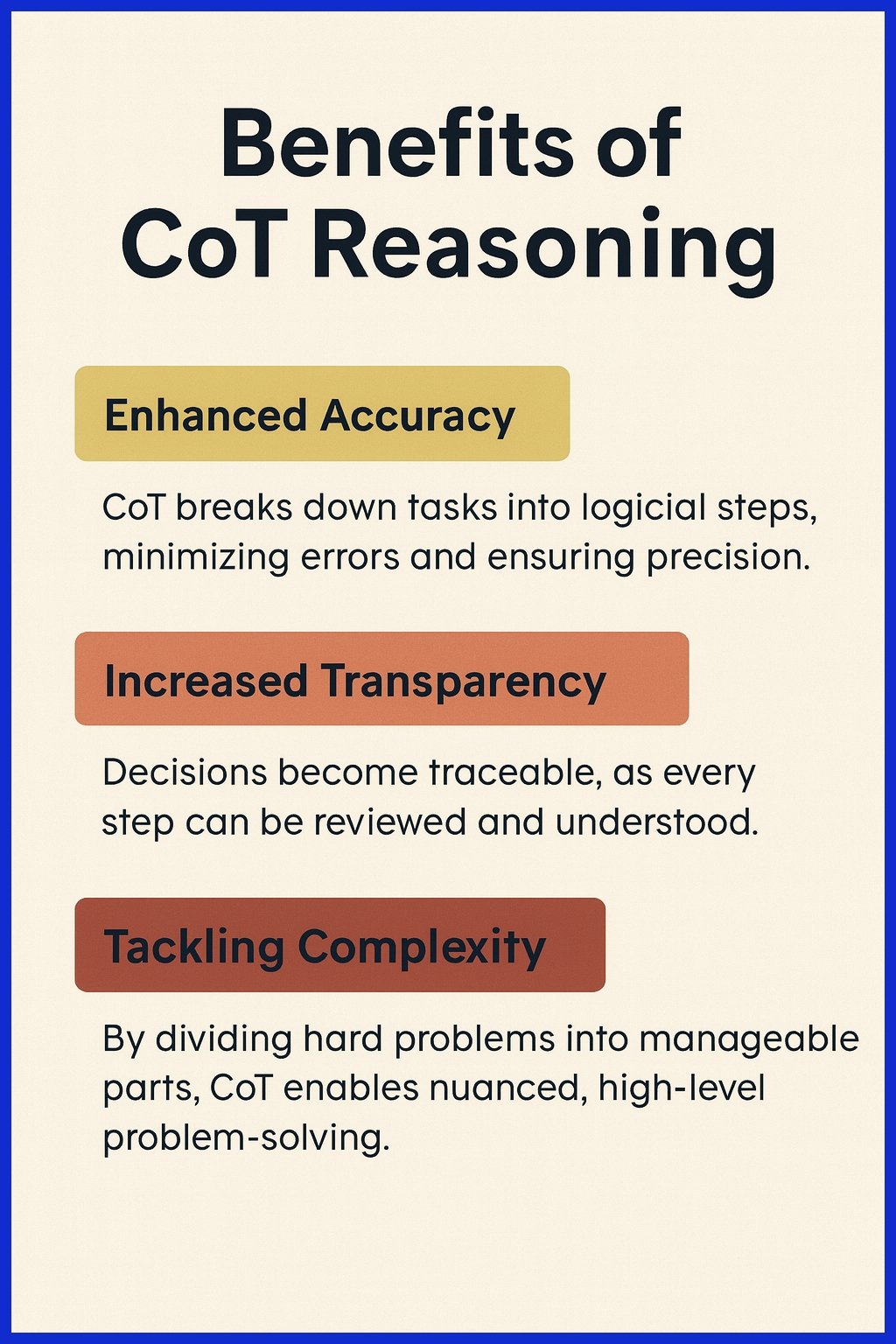

Enhanced Accuracy

Focusing on intermediate steps means the AI isn’t just guessing. Each piece of reasoning is worked through in turn. This systematic process reduces errors, particularly on tricky or ambiguous problems.

When a model explains its reasoning, such as decomposing a math problem or outlining an argument, the final response is less likely to come out of left field. Careful decomposition leads to more reliable AI outputs.

For instance, in financial forecasting or medical diagnosis, overlooking a step can be expensive. CoT’s step-by-step reasoning helps avoid those pitfalls, approaching the level of diligence you’d expect from a veteran analyst.

The connection is clear: organized logic tends to produce more reliable results. Whether constructing a content outline or cracking a multi-level riddle, CoT-prompted systems often outperform models that skip the intermediate reasoning.

Increased Transparency

Just as important, making the reasoning process visible goes a long way toward building user trust. If people can see how an answer was reached, they’re more likely to understand and trust it.

This openness lets users spot misconceptions or errors, keeping the AI accountable for its content. Clear reasoning invites users to follow along, making AI less of a black box. It also gives feedback and refinement a structure, which is essential in high-stakes areas.

Complex Problems

CoT shines brightest when confronted with anything but straightforward problems. By fragmenting a challenge into smaller pieces, the AI can address each piece with attention.

Complex decisions—such as diagnosing a rare disease or planning a multi-stage project—thrive under this structure. Stepwise reasoning allows the model to consider alternative possibilities prior to converging on an answer.

Imagine an AI analyzing visual cues for a safety inspection: CoT allows it to weigh each observation methodically, rather than lumping everything together.

Prompt chaining, a close relative of CoT, links several prompts so that the output of one step becomes the input to the next, making large, unwieldy problems tractable. This method is particularly helpful in research, law, or technical debugging, where skipping steps can result in significant oversights.
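
A minimal sketch of prompt chaining follows, assuming each step is a plain instruction and the previous output is passed along as input. The helper `run_chain`, the prompt layout, and `tracing_model` (a stand-in that simply records what it was given) are hypothetical names used only for this example.

```python
from typing import Callable


def run_chain(steps: list[str], task: str, model: Callable[[str], str]) -> list[str]:
    """Run instructions in order, feeding each output into the next prompt."""
    outputs = []
    context = task
    for instruction in steps:
        prompt = f"{instruction}\n\nInput:\n{context}"
        context = model(prompt)  # this output becomes the next step's input
        outputs.append(context)
    return outputs


def tracing_model(prompt: str) -> str:
    """Stand-in for a real model: echoes the instruction and the input it saw."""
    lines = prompt.splitlines()
    return f"{lines[0]} <- {lines[-1]}"


outputs = run_chain(
    ["List symptoms", "Propose causes"],
    "bug report",
    tracing_model,
)
print(outputs[-1])
```

Because every intermediate output is kept, a reviewer can inspect the chain at any link instead of only the final result.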

Advanced CoT Strategies

Advanced Chain-of-Thought (CoT) techniques, such as STaR (Self-Taught Reasoner), ToT (Tree of Thoughts), and prompt chaining, help AI tackle complex problems step by step. Instead of jumping to answers that “sound right,” these methods make the model explain its reasoning, which can reduce hallucinations and build user trust.

The challenge: designing these prompts often requires expertise. The payoff: more accurate, reliable, and nuanced responses.

Self-Correction

Self-correction lets AI learn from its mistakes. By reviewing its own outputs, the model adjusts its strategy in future attempts.

- How it works:
  - AI spots errors in its reasoning.
  - Feedback (from users or QA systems) guides it to improve.
  - The model refines its steps for more accurate results.
- Example: In math tutoring, if the AI miscalculates, self-correction nudges it to recheck earlier steps until the solution is correct. Over time, this process reduces errors and strengthens explanations.
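
The math-tutoring example can be sketched as a generate, check, and retry loop. This is an illustration under assumptions, not a description of how any particular system works: `solve_with_self_correction`, `stub_tutor`, and `check_product` are hypothetical, and the stub deliberately answers wrongly on the first attempt.

```python
from typing import Callable, Optional


def solve_with_self_correction(
    question: str,
    model: Callable[[str], str],
    verifier: Callable[[str], Optional[str]],
    max_attempts: int = 3,
) -> tuple[str, int]:
    """Ask, check the answer, and re-ask with feedback until it passes."""
    feedback: Optional[str] = None
    answer = ""
    for attempt in range(1, max_attempts + 1):
        prompt = question
        if feedback is not None:
            prompt += f"\nYour previous answer was wrong: {feedback}\nRecheck each step."
        answer = model(prompt)
        feedback = verifier(answer)  # None means the answer passed the check
        if feedback is None:
            return answer, attempt
    return answer, max_attempts


def stub_tutor(prompt: str) -> str:
    """Stand-in for a real model: miscalculates until asked to recheck."""
    return "102" if "Recheck" in prompt else "92"


def check_product(answer: str) -> Optional[str]:
    """Verify the answer to 17 * 6; return an error message if it is wrong."""
    return None if int(answer) == 17 * 6 else f"17 * 6 is not {answer}"


answer, attempts = solve_with_self_correction("What is 17 * 6?", stub_tutor, check_product)
print(answer, attempts)
```

The verifier here is exact arithmetic; in practice the feedback might come from users or QA systems, as the list above notes.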

Retrospective Thinking

Retrospective thinking means AI looks back at past reasoning to see what worked and what didn’t. By reflecting on earlier outputs, the model improves how it handles future tasks.

- Example: In financial forecasting, an AI can analyze old predictions versus actual outcomes, then adapt its methods for better accuracy.

This reflective process boosts adaptability, reduces repeated mistakes, and helps AI build deeper understanding in complex domains.
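
For the forecasting example, a retrospective review might look like the sketch below: past predictions from two methods are compared against actual outcomes, and the method with the lower average error is preferred next time. The method names and all numbers are made-up illustrative data, and mean absolute error is just one reasonable choice of metric.

```python
def mean_absolute_error(predicted: list[float], actual: list[float]) -> float:
    """Average absolute gap between predictions and what actually happened."""
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


# Made-up past forecasts from two hypothetical methods, plus actual outcomes.
history = {
    "trend_extrapolation": [102.0, 108.0, 115.0],
    "moving_average": [100.0, 104.0, 109.0],
}
actual = [101.0, 105.0, 110.0]

errors = {name: mean_absolute_error(preds, actual) for name, preds in history.items()}
best_method = min(errors, key=errors.get)
print(errors, best_method)
```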

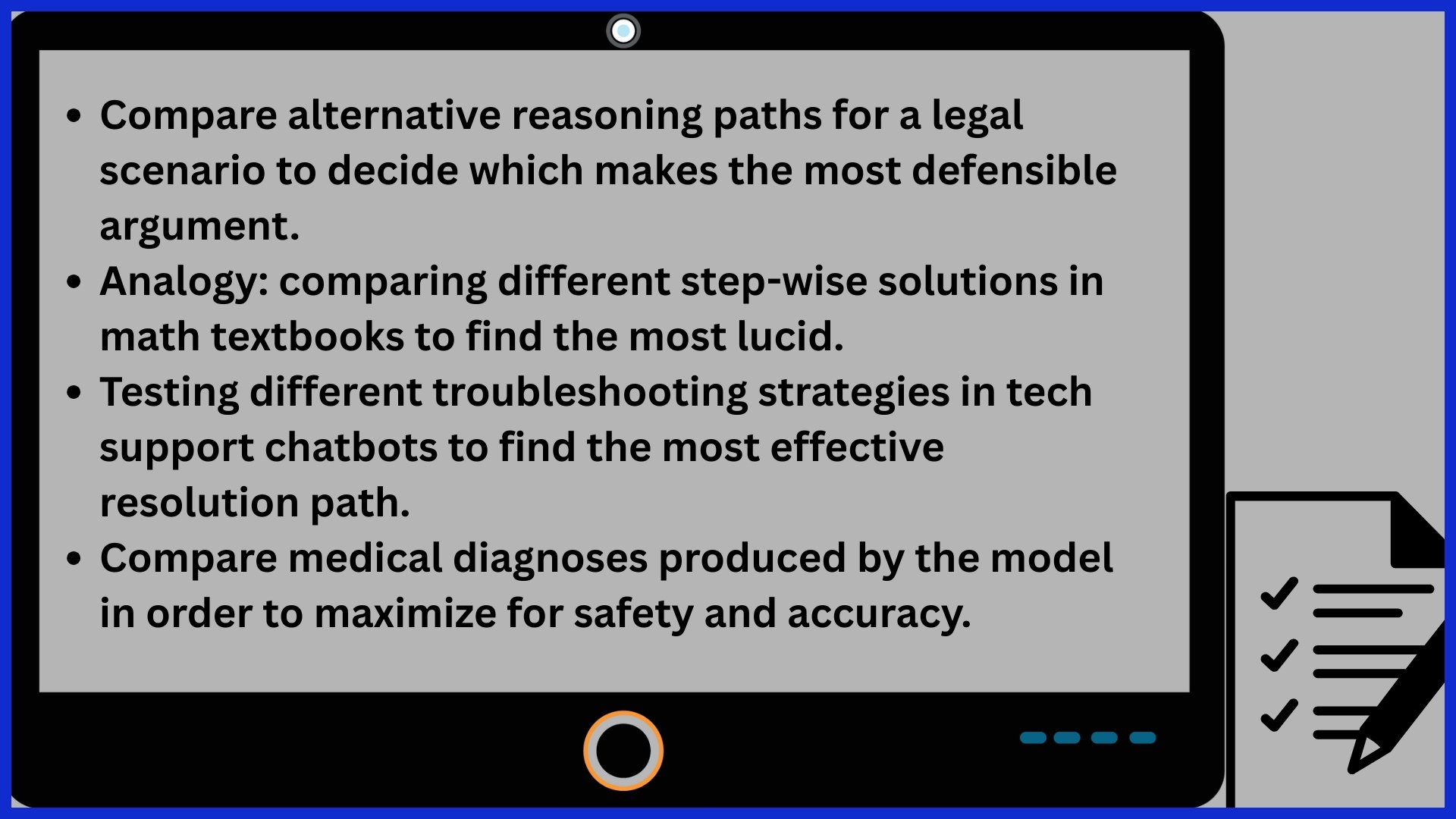

Comparative Analysis

Comparative analysis lets an AI compare different strategies and choose the best route. This not only shows which strategies work best in a given context but also helps uncover blind spots in the model’s reasoning.

Practical CoT Applications

The table below outlines where CoT models excel in complex reasoning tasks.

| Application Area | Example Use Cases | Benefits |
|---|---|---|
| Mathematics | Multi-step arithmetic, algebra, and geometry proofs | Improved accuracy, clearer reasoning steps |
| Logical Puzzles | Sudoku, riddles, chess, and escape room challenges | Enhanced engagement, deeper exploration |
| Content Creation | Storytelling, blog posts, article outlines, summaries | Coherent structure, logical narrative flow |
| Robotics | Stepwise decision-making in navigation, task planning | Reliable, explainable actions |
| EdTech | Step-by-step solution generation for learners | Better understanding, increased transparency |

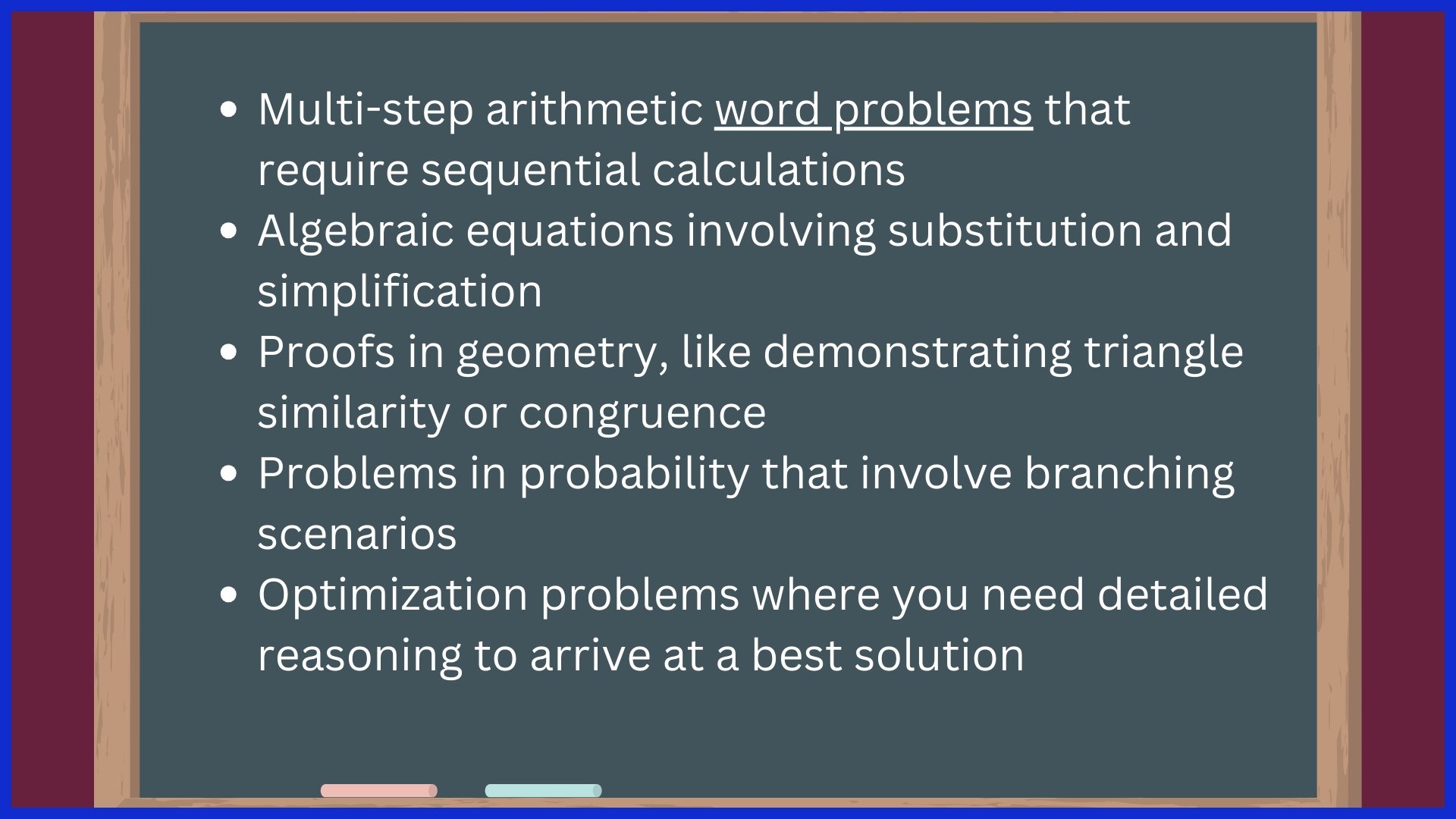

Mathematical Problems

Decomposing arithmetic or algebraic problems into logical steps means that each calculation or transformation is explicit, not hidden behind a mental leap. That’s where CoT shines: no leapfrogging, just sequential A to B to C thinking.

For example, solving a quadratic equation with CoT means stating the formula, substituting values, computing the discriminant, and finally calculating the roots, all spelled out. This structured approach is indispensable for accuracy: missing a step often means missing the solution entirely.

CoT helps AI explain each move, so learners or users can follow along, not just see the final answer.
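
The quadratic walkthrough can be mirrored in a few lines of code that record each step alongside the result. This is a sketch for illustration: the function name `solve_quadratic_with_steps` and the wording of each step are assumptions, and only real roots are handled.

```python
import math


def solve_quadratic_with_steps(a: float, b: float, c: float) -> tuple[list[str], list[float]]:
    """Solve ax^2 + bx + c = 0 over the reals, recording each reasoning step."""
    if a == 0:
        raise ValueError("a must be nonzero for a quadratic equation")
    steps = [f"Equation: {a}x^2 + {b}x + {c} = 0"]
    steps.append("Formula: x = (-b ± sqrt(b^2 - 4ac)) / (2a)")
    discriminant = b * b - 4 * a * c
    steps.append(f"Discriminant: ({b})^2 - 4*({a})*({c}) = {discriminant}")
    if discriminant < 0:
        steps.append("The discriminant is negative, so there are no real roots.")
        return steps, []
    root = math.sqrt(discriminant)
    x1 = (-b + root) / (2 * a)
    x2 = (-b - root) / (2 * a)
    steps.append(f"Roots: x1 = {x1}, x2 = {x2}")
    return steps, [x1, x2]


steps, roots = solve_quadratic_with_steps(1, -3, 2)
for line in steps:
    print(line)
```

For x^2 - 3x + 2 = 0 the discriminant is 1, giving the roots 2 and 1; each printed line corresponds to one step a learner could check.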

Logical Puzzles

Chain-of-Thought (CoT) makes solving puzzles more systematic, not just guesswork. Instead of jumping to answers, the model explains each step—like in Sudoku, chess, or logic grids.

- It tests strategies, rules out dead ends, and builds toward the solution step by step.
- Each move is transparent, so you can check the reasoning at any point.
- Example: In a seating puzzle, CoT follows each clue in order until the full answer is clear.
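
To make the seating example concrete, the sketch below applies clues one at a time and records how many arrangements survive each one. The three names, both clues, and the helper `solve_seating` are invented for illustration; a brute-force search like this only suits small puzzles.

```python
from itertools import permutations

PEOPLE = ("Ana", "Ben", "Cy")

# Invented clues: a description plus a test on a seating (seats left to right).
CLUES = [
    ("Ana is not in the leftmost seat", lambda s: s[0] != "Ana"),
    ("Ben sits immediately left of Cy", lambda s: s.index("Ben") + 1 == s.index("Cy")),
]


def solve_seating(people, clues):
    """Filter all seatings clue by clue, keeping a trace of each step."""
    candidates = list(permutations(people))
    trace = [f"Start: {len(candidates)} possible seatings"]
    for description, test in clues:
        candidates = [s for s in candidates if test(s)]
        trace.append(f"After '{description}': {len(candidates)} left")
    return candidates, trace


solutions, trace = solve_seating(PEOPLE, CLUES)
for line in trace:
    print(line)
print(solutions)
```

The trace plays the role of the visible reasoning: six seatings to start, four after the first clue, and one after the second.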

Content Creation

CoT isn’t just for math or logic—it also powers storytelling and writing. By building ideas step by step, it ensures content flows naturally.

- Each paragraph or scene connects smoothly to the next.
- Helps articles, blogs, or stories stay clear, structured, and easy to follow.
- Example: A CoT-written news piece starts with the event, adds context, explores viewpoints, and ends with a clear summary.

The result: writing that feels purposeful and human, not random.

Conclusion

Chain-of-thought reasoning pulls back the curtain on true problem-solving. Rather than leaping to quick answers, it highlights every step, correction, and insight along the way. The result isn’t just an answer—it’s clarity. Complex questions get broken into manageable parts, producing solutions that are transparent, trustworthy, and easier to refine.

From AI models to business strategies, this approach delivers results you can check and rely on. For teams, educators, and decision-makers, it shows that structured thinking tends to outperform guesswork. In a world full of shortcuts and surface-level fixes, CoT shows that taking the time to reason step by step leads to smarter, more dependable outcomes.

At SERPninja.io, we believe the same applies to SEO and digital strategy: success isn’t magic, it’s methodical, transparent, and built step by step. That’s how real growth happens.

Frequently Asked Questions

What is chain of thought reasoning?

Chain of thought reasoning is a technique for problem-solving. It decomposes complicated queries into tiny, rational steps, thereby rendering solutions more understandable and more verifiable.

How does chain of thought reasoning improve problem-solving?

This technique organizes your reasoning explicitly. By advancing one step at a time, it reduces mistakes and improves decisions on complex tasks.

Where is chain of thought reasoning used?

Chain of thought reasoning is used in education, AI, math, and decision making, where it makes solutions clearer and easier to understand.

Why is chain of thought reasoning effective?

It encourages structured reasoning and exposition, prompting both humans and machines to walk through their answers and address errors upfront.

Can artificial intelligence use chain of thought reasoning?

Yes. Many AI systems use chain of thought reasoning to tackle complex tasks by breaking them into manageable steps.

What are some advanced strategies in chain of thought reasoning?

Advanced strategies include self-correction, retrospective thinking, comparative analysis, and prompt chaining, along with techniques such as STaR and ToT. They make reasoning more robust and flexible on complex tasks.

How does chain of thought reasoning support learning?

It helps students understand complicated subjects by showing the progression from one step to the next, which strengthens their own reasoning.